Consultant fined $6 million for AI calls mimicking Biden

The Federal Communications Commission has fined a political consultant $6 million for robocalls to voters that used artificial intelligence to mimic the voice of President Joe Biden ahead of the New Hampshire presidential primary.

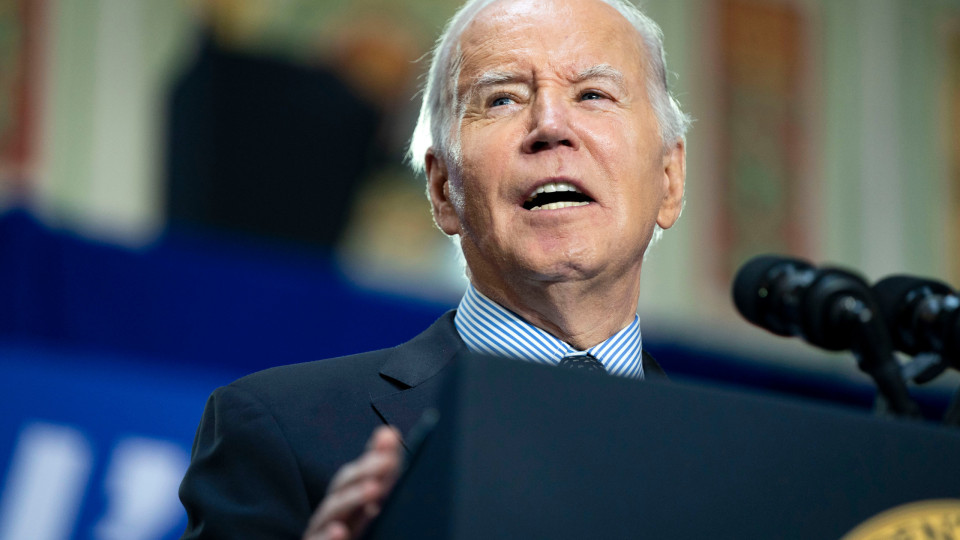

© Bonnie Cash/UPI/Bloomberg via Getty Images

Steve Kramer, who also faces 24 criminal charges in New Hampshire, has admitted creating a robocall that went to thousands of voters two days before the nation’s first primary on Jan. 23.

The message featured an artificial intelligence-generated voice that sounded like Biden, using his signature phrase “What a bunch of malarkey,” and falsely suggested that voting in the primary would prevent people from voting in the November general election.

Besides the $6 million fine, Kramer faces 13 felony charges alleging that he violated a New Hampshire law against using deceptive information to discourage someone from voting, according to court documents.

The political consultant also faces 11 misdemeanor charges that allege he falsely represented himself or caused someone else to falsely represent him as a candidate.

The charges were filed in four counties, but as is often the case with felonies, they will be prosecuted by the state attorney general’s office.

Kramer did not immediately respond to a request for comment Tuesday from The Associated Press, but he has previously said he was trying to draw attention to the dangers of artificial intelligence.

The Federal Communications Commission also issued a $2 million fine to Lingo Telecom, a telecommunications company that it says transmitted the calls. A company spokesperson did not immediately respond to a request for comment Tuesday from the AP.

FCC Chair Jessica Rosenworcel said regulators are committed to helping states go after the bad actors. In a statement, she called such robocalls “maddening.”

“Because when the caller ID looks like a politician we know, a celebrity we admire, or a family member we cherish, any of us could be tricked into believing something that is not true by a robocall using AI-generated voices,” Rosenworcel said in the statement.

“That’s exactly how the bad actors behind these deceptive robocalls with manipulated voices want us to react,” she said.
